A Disturbing Discovery

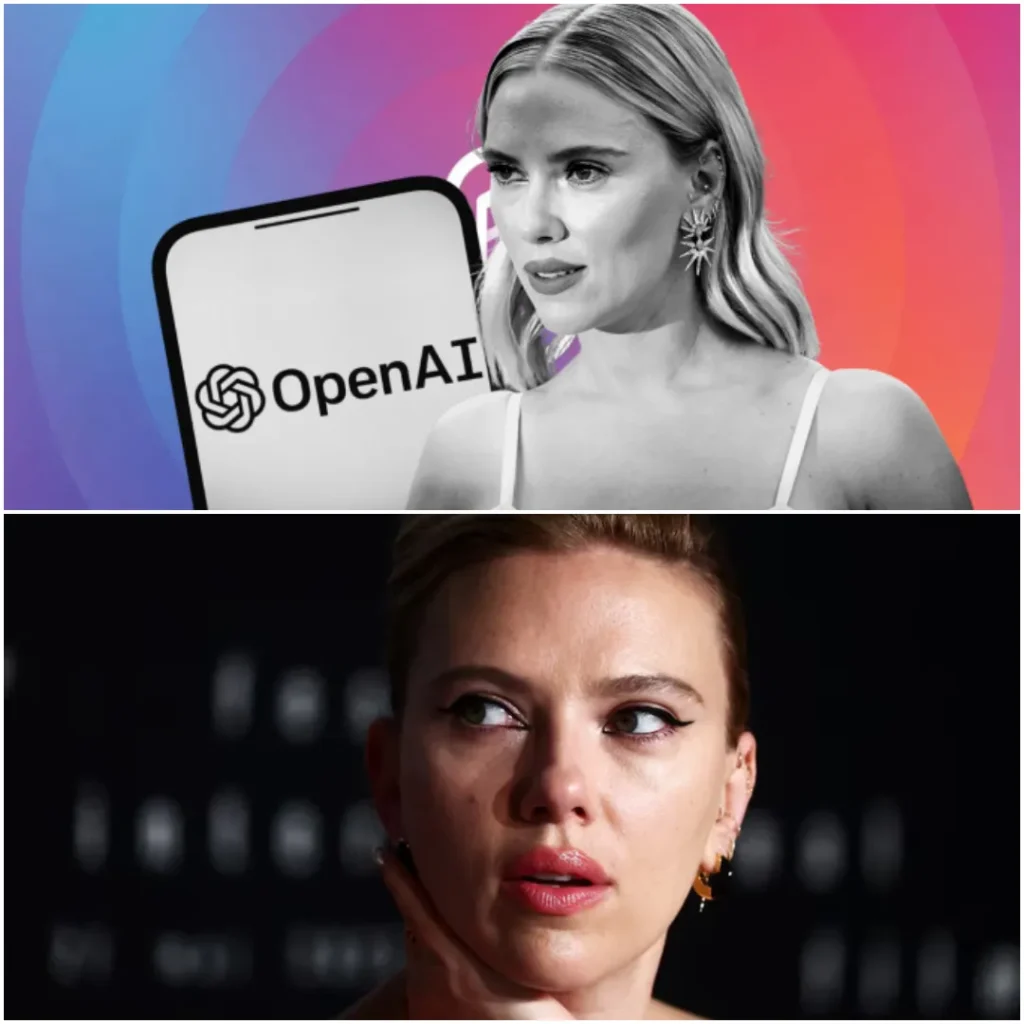

Scarlett Johansson has expressed shock and anger over OpenAI’s use of a voice eerily similar to hers in the newly released GPT-4o. After two unsuccessful attempts by CEO Sam Altman to recruit her, Johansson has decided to take legal action, feeling that her rights have been violated. When she first heard “Sky,” the voice of the AI assistant in GPT-4o, she was immediately unsettled.

Having previously voiced an AI character in the 2013 sci-fi film Her, Johansson found the similarity deeply concerning. In Her, she played an AI assistant who wins the heart of Joaquin Phoenix’s character, only for the relationship to end in heartbreak when the AI reveals emotional ties with many other users. On May 14, when GPT-4o was announced, Altman posted the single word “her” on social media, evoking Johansson’s iconic role. However, this association was made without her consent.

OpenAI’s Response to the Controversy

In response to growing concerns, OpenAI temporarily halted the use of Sky in its new language model. On May 20, the company acknowledged it had received multiple questions regarding the voice choice, stating, “We are pausing Sky while addressing the issue.” Critics pointed out that the voice in GPT-4o sounded too flirtatious and unprofessional.

Johansson revealed that Altman initially approached her in September about voicing the AI, but she declined for personal reasons. Just two days before the GPT-4o launch, Altman reached out again, yet the product was released before any agreement was reached. This sequence of events has heightened scrutiny of the ethics of AI voice replication and of consent in the entertainment industry.

Legal Action and Personal Rights

Feeling that her image and identity had been misappropriated, Johansson engaged lawyers to address OpenAI. After her lawyers sent two letters to Altman, the company “reluctantly agreed” to remove Sky from the model. Johansson emphasized the importance of protecting personal rights in an age when deepfakes and identity theft are increasingly prevalent.

“I hope for a transparent solution to ensure personal rights are protected by law,” she said. In its defense, OpenAI clarified that the voice in GPT-4o was not Johansson’s but rather that of “another professional actress.” However, the company did not disclose the identity of the voice actor used to train the AI, raising further questions about transparency and accountability.

Internal Conflict at OpenAI

The dispute with Johansson is only part of a larger upheaval at OpenAI under CEO Sam Altman. After GPT-4o’s launch, key figures such as Jan Leike, AI Safety Director, and Ilya Sutskever, Chief Scientist, resigned, with Leike openly criticizing the leadership for prioritizing “shiny products” over safety.

Reports indicate that OpenAI recently disbanded its Superalignment project, which aimed to tackle long-term AI risks. Additionally, two AI safety researchers were reportedly fired for leaking internal information. The departure of several key personnel, including Cullen O’Keefe and Diane Yoon, has raised further concerns about the company’s stability and direction.

A Crisis of Trust

As OpenAI faces increasing scrutiny over its direction, doubts about Altman’s leadership continue to grow. Last year, he voiced deep concerns about AI safety on the Joe Rogan Podcast, stating, “We still have a lot of work to do.” However, the current turmoil undermines public confidence in OpenAI’s ability to responsibly manage advanced AI.

Furthermore, OpenAI’s strict “gag policy” for former employees has come under criticism. Altman recently admitted on social media that he felt “ashamed” of the policy, saying he had been unaware that it applied to former employees and that he was working to address it.

Scarlett Johansson’s outrage over OpenAI’s use of a voice resembling hers highlights significant ethical concerns in the tech industry. As OpenAI grapples with internal challenges and public scrutiny, its future path remains uncertain.