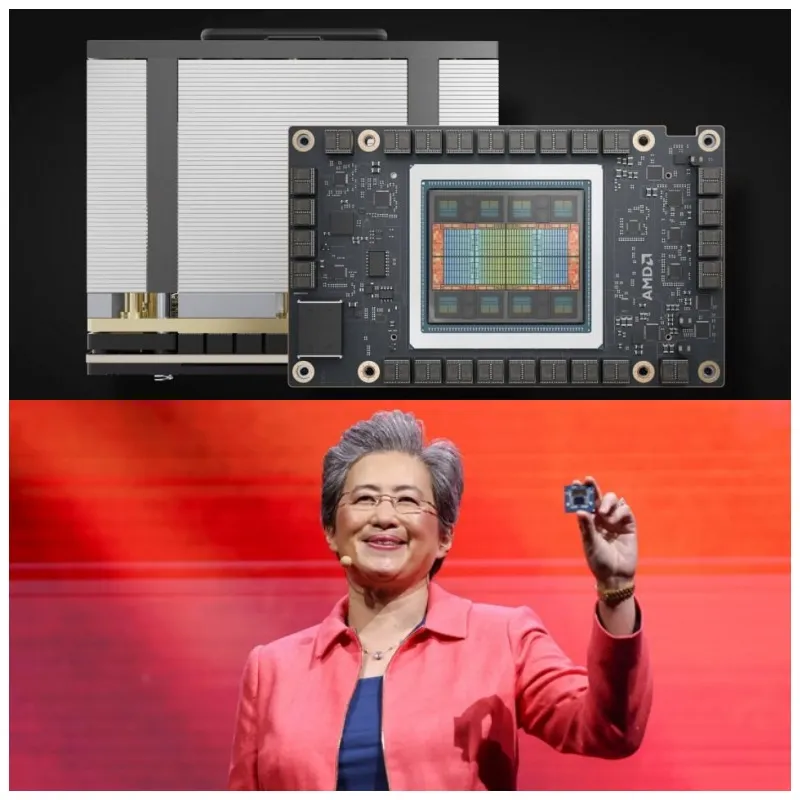

AMD has officially introduced its latest AI chip, the Instinct MI325X, designed to compete directly with Nvidia's H200 in the growing AI data center market. The new GPU accelerator was unveiled at AMD's Advancing AI event in San Francisco on October 10, 2024. It targets AI training and inference workloads and is seen as a strong contender in the highly competitive AI hardware industry.

The Instinct MI325X builds on the success of its predecessor, the MI300X, launched in late 2023. Its most significant upgrade is memory: capacity rises to 256 GB of HBM3e, up from the MI300X's 192 GB of HBM3. This mirrors Nvidia's strategy with the H200, which kept the computing power of the earlier H100 but increased memory capacity and bandwidth.

AMD's approach suits AI workloads that keep growing in size. More memory lets a chip hold larger models, and higher bandwidth feeds its compute units faster. This matters to cloud providers serving very large models such as OpenAI's GPT-4, which is widely reported, though not confirmed by OpenAI, to exceed a trillion parameters. Putting more high-bandwidth memory (HBM) on each chip means a large model can be deployed across fewer GPUs and nodes, a potential advantage over Nvidia.
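To make the "fewer nodes" point concrete, the back-of-the-envelope sketch below estimates how many GPUs are needed just to hold a model's weights. It is a rough illustration under stated assumptions. It assumes 2 bytes per parameter (FP16/BF16), ignores the KV cache, activations, and runtime overhead, and treats every gigabyte of HBM as usable. The H200's 141 GB comes from Nvidia's published specifications, not from this article, and the 1-trillion-parameter model is hypothetical.

```python
import math

# Per-GPU HBM capacity in GB. MI325X and MI300X figures come from the article;
# the H200's 141 GB is Nvidia's published spec. All of it is treated as usable,
# which overstates the memory really available for weights.
HBM_GB = {
    "MI325X": 256,
    "MI300X": 192,
    "H200": 141,
}


def weight_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Size of the model weights in GB (default assumes FP16/BF16, 2 bytes/param)."""
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, gpu: str, bytes_per_param: float = 2.0) -> int:
    """Smallest GPU count whose combined HBM can hold the weights alone.

    Ignores KV cache, activations, and framework overhead, so real counts are higher.
    """
    return math.ceil(weight_gb(params_billion, bytes_per_param) / HBM_GB[gpu])


if __name__ == "__main__":
    for model, size_b in [("Llama 3.1 405B", 405), ("hypothetical 1T model", 1000)]:
        counts = {gpu: min_gpus(size_b, gpu) for gpu in HBM_GB}
        print(model, counts)
```

Run as-is, it prints `Llama 3.1 405B {'MI325X': 4, 'MI300X': 5, 'H200': 6}` and `hypothetical 1T model {'MI325X': 8, 'MI300X': 11, 'H200': 15}`. Real deployments need headroom beyond the weights, so actual counts would be higher. The ratios still show why AMD emphasizes per-chip memory capacity.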

The MI325X also raises memory bandwidth to 6 TB/s, up from 5.3 TB/s on the MI300X. The trade-off is power: board power climbs from 750 watts on the MI300X to 1,000 watts on the MI325X. AMD claims the MI325X delivers 20% to 40% higher inference performance than Nvidia's H200 on models such as Meta's Llama 3.1, in both its 70-billion-parameter (70B) and 405-billion-parameter (405B) versions.
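A simple ceiling shows why bandwidth matters for inference. When a model generates one token at a time (batch size 1), the GPU must read roughly every weight from memory for each token. Tokens per second therefore cannot much exceed bandwidth divided by weight size. The sketch below is an idealized bound, not a prediction of AMD's or Nvidia's benchmark results. It assumes Llama 3.1 70B is stored as 8-bit (FP8) weights, about 70 GB, fits on a single GPU, and runs at peak bandwidth. The H200's 4.8 TB/s is Nvidia's published spec rather than a figure from this article.

```python
# Peak HBM bandwidth in TB/s. MI325X and MI300X figures come from the article;
# the H200's 4.8 TB/s is Nvidia's published spec (an outside assumption here).
BANDWIDTH_TBPS = {"MI325X": 6.0, "MI300X": 5.3, "H200": 4.8}

# Assumption: 70B parameters x 1 byte (FP8), weights only, on a single GPU.
LLAMA_70B_FP8_GB = 70 * 1


def max_tokens_per_second(weight_gb: float, bandwidth_tbps: float) -> float:
    """Idealized upper bound on batch-1 decode speed.

    Assumes every weight byte is read once per generated token at peak bandwidth;
    ignores KV-cache reads and kernel inefficiency, so real speeds are lower.
    """
    seconds_per_token = weight_gb / (bandwidth_tbps * 1000.0)  # TB/s -> GB/s
    return 1.0 / seconds_per_token


if __name__ == "__main__":
    for gpu, bw in BANDWIDTH_TBPS.items():
        print(f"{gpu}: <= {max_tokens_per_second(LLAMA_70B_FP8_GB, bw):.1f} tokens/s")
```

The script prints ceilings of about 85.7, 75.7, and 68.6 tokens/s for the MI325X, MI300X, and H200. Measured throughput also depends on compute, batch size, and software maturity, so vendor benchmark claims need not track this bandwidth ratio.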

AMD has announced that the Instinct MI325X will go into mass production in the fourth quarter of 2024 and will be offered in systems from major manufacturers including Dell, Eviden, Gigabyte, Hewlett Packard Enterprise, Lenovo, and Supermicro. AMD is also planning a higher-capacity chip, the MI355X, which will carry 288 GB of HBM3e and is slated for 2025.

The MI325X is a major step in AMD's effort to challenge Nvidia's dominance of the AI GPU market, where Nvidia, led by CEO Jensen Huang, currently holds the large majority of sales. If cloud computing giants and AI developers begin adopting AMD's hardware as a viable alternative, Nvidia could face real competition.

At the launch event, AMD made its ambitions clear: it aims to capture a considerable share of an AI accelerator market that it estimates will reach $500 billion by 2028. "The demand for AI continues to grow strongly and is actually exceeding expectations. It's clear that the investment rate continues to grow everywhere," said AMD CEO Lisa Su.

To maintain this momentum, AMD is moving to an annual release cadence for its AI chips. Although the MI300X only began shipping in late 2023, the MI325X will be followed by the MI350 series in 2025 and the MI400 series in 2026, a schedule meant to keep pace with market demand and with Nvidia.

The Instinct MI325X marks a milestone in AMD's effort to challenge Nvidia in the rapidly expanding AI chip market. With more memory, higher bandwidth, claimed inference gains, and support from major server manufacturers, AMD is poised to become a more formidable competitor. As demand for AI continues to rise, AMD's strategy of rapid, annual product launches could help it secure a larger slice of the AI hardware market and intensify competition with industry leader Nvidia.