Google's custom TPUs are reshaping the AI landscape, training Apple's AI models and powering Google's own Gemini chatbot, as the tech giant competes for supremacy in the AI chip race.

At Google's sprawling headquarters in Mountain View, California, a highly specialized lab hums with activity. Rows of server racks stretch across the facility, but they aren't running Google's search engine or handling workloads for Google Cloud's millions of customers. Instead, they are testing Google's in-house microchips, known as Tensor Processing Units (TPUs).

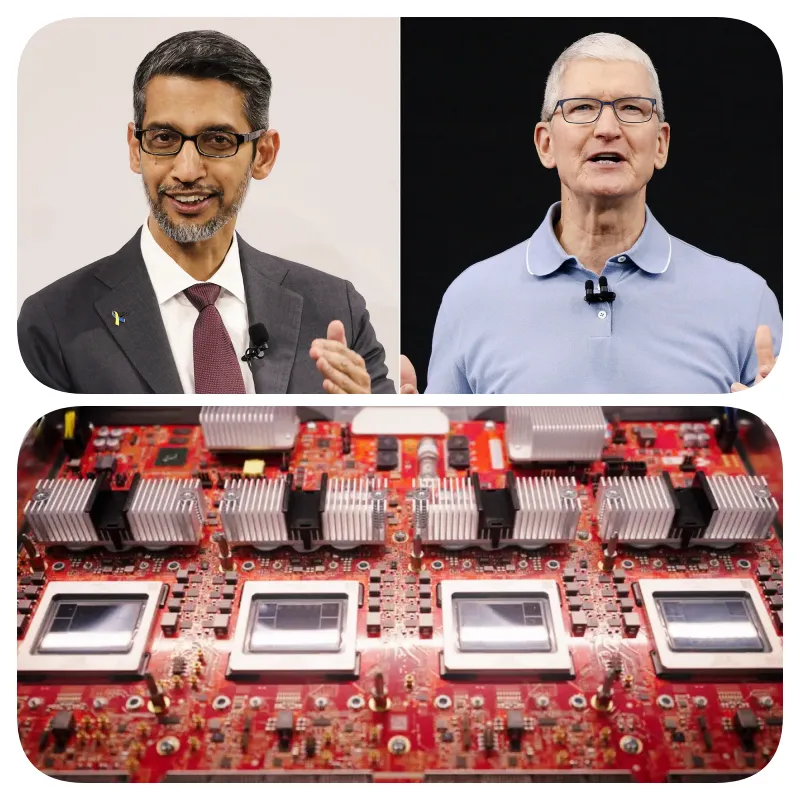

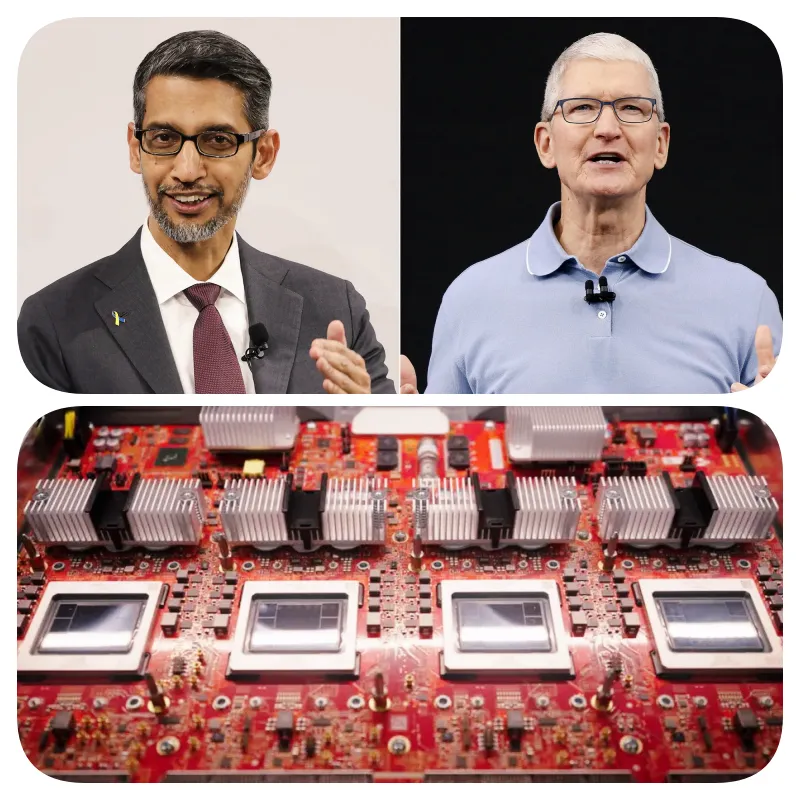

Initially designed to optimize Google's internal workloads, TPUs have become a cornerstone of the company's AI strategy. They have been available to cloud customers since 2018, and they were recently thrust into the spotlight when Apple revealed that it uses TPUs to train the AI models behind Apple Intelligence. Google also relies on TPUs to power its own AI chatbot, Gemini.

Futurum Group CEO Daniel Newman notes, "The world sort of has this fundamental belief that all AI, especially large language models, are trained on Nvidia hardware. While Nvidia indeed dominates the market, Google has forged its own path with TPUs." Google has been a pioneer in custom AI chips since its TPUs debuted in 2015, several years before Amazon and Microsoft launched similar efforts.

Despite its early lead in AI chip development, Google has struggled in the broader generative AI race. The company has been criticized for product missteps, and its Gemini chatbot arrived more than a year after OpenAI's ChatGPT. Google Cloud, however, has gained significant traction on the strength of its AI offerings: Alphabet reported that cloud revenue grew 29% in the most recent quarter, topping $10 billion for the first time.

This success, Newman argues, is partly due to the unique advantages provided by Google’s TPUs. “The AI cloud era has redefined how companies are perceived, and Google’s silicon differentiation with TPUs has played a crucial role in elevating the company’s position in the cloud market.”

The effort to build these chips began in 2014 with a simple thought experiment at Google: What would happen if users interacted with Google by voice for just 30 seconds a day? The answer was that Google would need to double its data center computing capacity, which pushed the company to look for more efficient solutions. The result was the TPU, custom hardware that runs AI tasks up to 100 times more efficiently than general-purpose hardware.

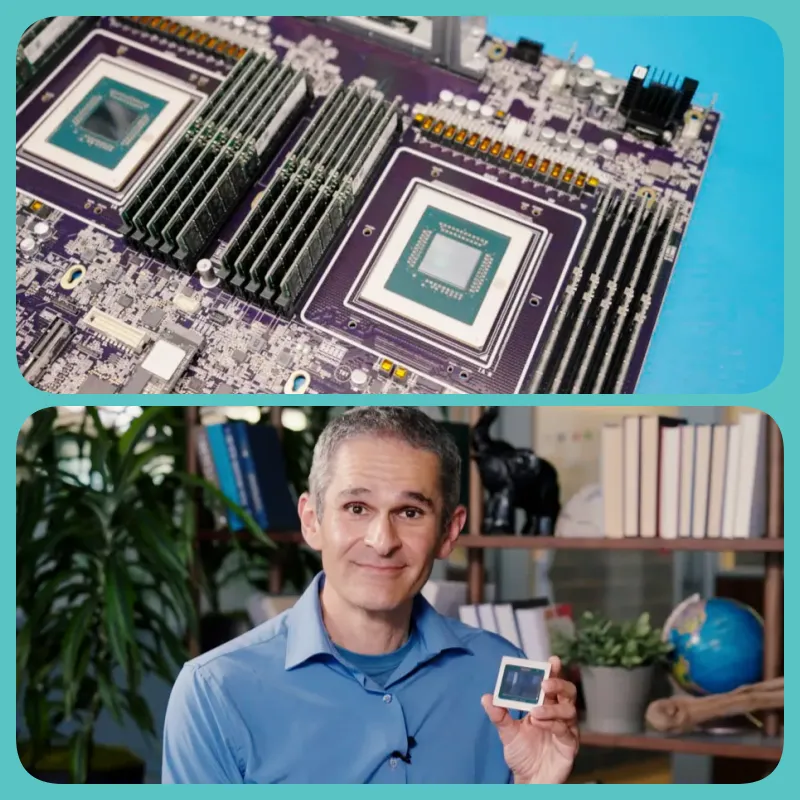

Google's data centers still rely on traditional CPUs and Nvidia GPUs, but TPUs are a different kind of chip: application-specific integrated circuits (ASICs), which are built for one specialized type of task. The TPU, designed for AI, was the first chip of its kind and still dominates the market for custom cloud AI accelerators, with a 58% market share according to The Futurum Group.

Google's custom chip work extends beyond TPUs. The company also designs chips for its devices, such as the Tensor G4 in the AI-enabled Pixel 9 and the A1 chip in the Pixel Buds Pro 2. But it's the TPU that truly sets Google apart, and the sixth-generation TPU, named Trillium, is due out later this year.

Developing these chips is no small feat. Google collaborates with Broadcom, a chip developer that also helps build AI chips for Meta, and relies on Taiwan Semiconductor Manufacturing Company (TSMC) for manufacturing. With geopolitical tensions rising, particularly between China and Taiwan, Google says it is mindful of the risks and has contingency plans in place, though it hopes they will never need to be activated.

Google is also preparing to release its first general-purpose CPU, Axion, by the end of the year, a move that could further strengthen its position in the chip market. Google is a latecomer to CPUs because it has focused its custom hardware efforts where it can deliver the most value, a focus that drove its work on TPUs and other custom chips.

The AI boom has not only reshaped the tech landscape but also highlighted the importance of power efficiency and environmental sustainability. Google is working to reduce the environmental impact of its AI infrastructure, including with new cooling methods for its TPUs, as part of what it describes as a commitment to responsible AI development.

Despite challenges ranging from geopolitical risks to heavy power and water demands, Google is pressing ahead with its AI hardware. As Amin Vahdat, head of custom cloud chips at Google, puts it, "I've never seen anything like this, and there's no sign of it slowing down. Hardware is going to play a really important part in the future of AI."